- June 20, 2024

- Posted by: admin

- Category: BitCoin, Blockchain, Cryptocurrency, Investments

Anthropic has launched Claude 3.5 Sonnet, the latest addition to its AI model lineup, claiming it surpasses its own previous models and competitors such as OpenAI’s GPT-4o. The model is available for free on Claude.ai and the Claude iOS app, and is also accessible via the Anthropic API, Amazon Bedrock, and Google Cloud’s Vertex AI. Claude 3.5 Sonnet is priced at $3 per million input tokens and $15 per million output tokens, with a 200,000-token context window.
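To put the per-token pricing in concrete terms, here is a minimal Python sketch that estimates the cost of a single request from the rates quoted above. The function name `estimate_cost_usd` and the constant names are hypothetical, chosen for illustration only. They are not part of any Anthropic SDK, and actual billing may differ from this simple arithmetic.

```python
from decimal import Decimal

# Rates quoted in the article (USD per million tokens).
INPUT_USD_PER_MILLION = Decimal("3")
OUTPUT_USD_PER_MILLION = Decimal("15")


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the cost in USD of one request (illustrative helper only)."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    million = Decimal(1_000_000)
    # Each side is billed at its own rate, proportional to the token count.
    total = (
        input_tokens * INPUT_USD_PER_MILLION
        + output_tokens * OUTPUT_USD_PER_MILLION
    )
    return total / million
```

Under these assumptions, a prompt that fills the whole 200,000-token context window and produces a 4,000-token reply would cost `estimate_cost_usd(200_000, 4_000)`, which works out to $0.60 for input plus $0.06 for output, or $0.66 in total.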

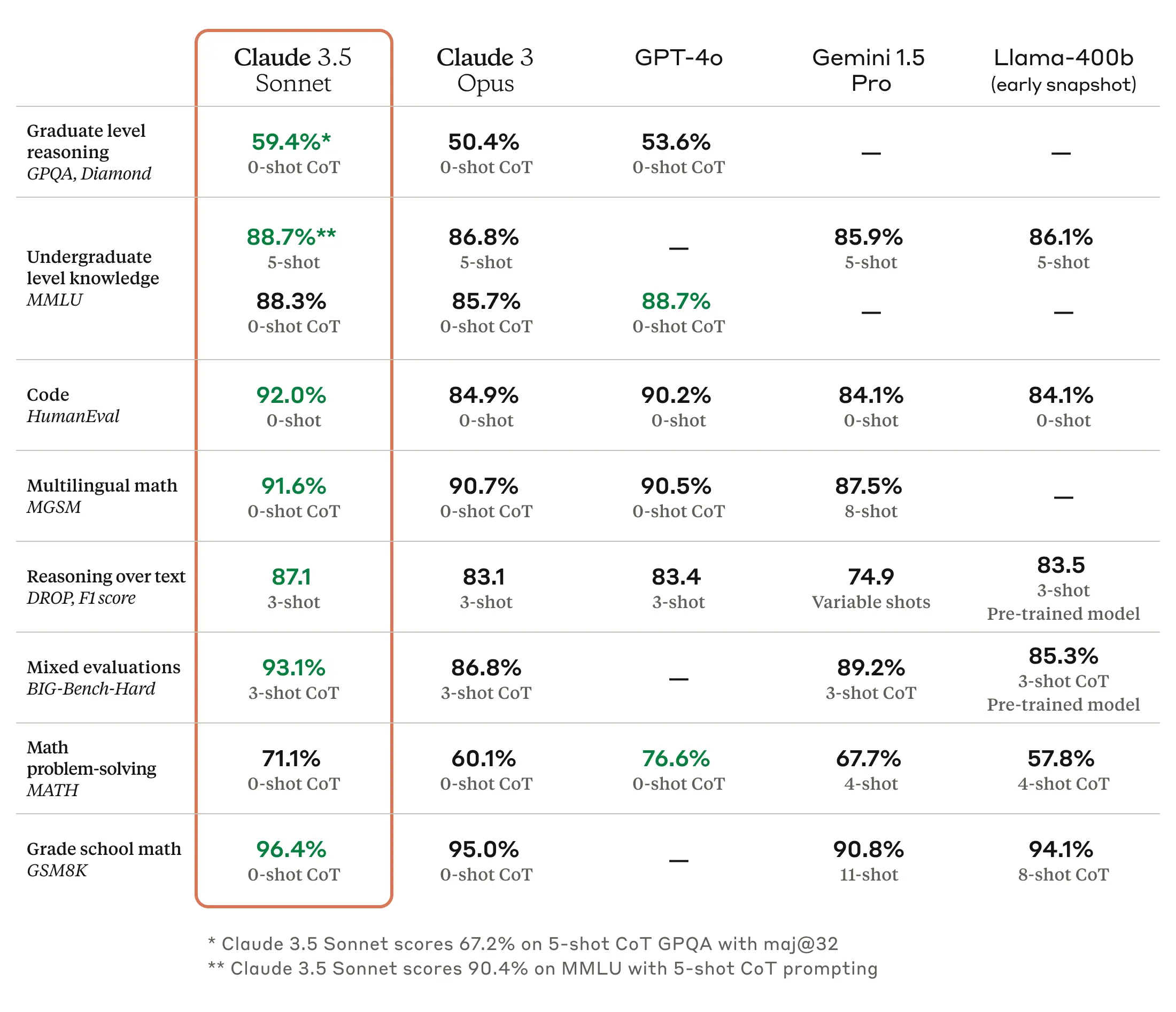

According to Anthropic, Claude 3.5 Sonnet sets new industry benchmarks for graduate-level reasoning (GPQA), undergraduate-level knowledge (MMLU), and coding proficiency (HumanEval). It shows significant improvements in understanding nuance, humor, and complex instructions, and it excels at generating high-quality content with a natural tone. The model operates at twice the speed of Claude 3 Opus, making it suitable for complex tasks like context-sensitive customer support and multi-step workflows.

“In an internal agentic coding evaluation, Claude 3.5 Sonnet solved 64% of problems, outperforming Claude 3 Opus, which solved 38%.”

When instructed and given the relevant tools, the model can independently write, edit, and execute code, making it effective for updating legacy applications and migrating codebases. It also excels in visual reasoning tasks, such as interpreting charts and graphs, and can accurately transcribe text from imperfect images, benefiting sectors like retail, logistics, and financial services.

Anthropic has also introduced Artifacts, a new feature on Claude.ai that allows users to generate and edit content like code snippets, text documents, or website designs in real time. This feature marks Claude’s evolution from a conversational AI to a collaborative work environment, with plans to support team collaboration and centralized knowledge management in the future.

Anthropic emphasizes its commitment to safety and privacy, stating that Claude 3.5 Sonnet has undergone rigorous testing to reduce misuse. The model has been evaluated by external experts, including the UK’s Artificial Intelligence Safety Institute (UK AISI), and Anthropic has integrated feedback from child safety experts to update its classifiers and fine-tune its models. The company states that it does not train its generative models on user-submitted data without explicit permission.

Looking ahead, Anthropic plans to release Claude 3.5 Haiku and Claude 3.5 Opus later this year, along with new features like Memory, which will enable Claude to remember user preferences and interaction history.

The post Claude 3.5 sets new AI benchmarks, beating GPT-4o in coding and reasoning appeared first on CryptoSlate.